Abstract

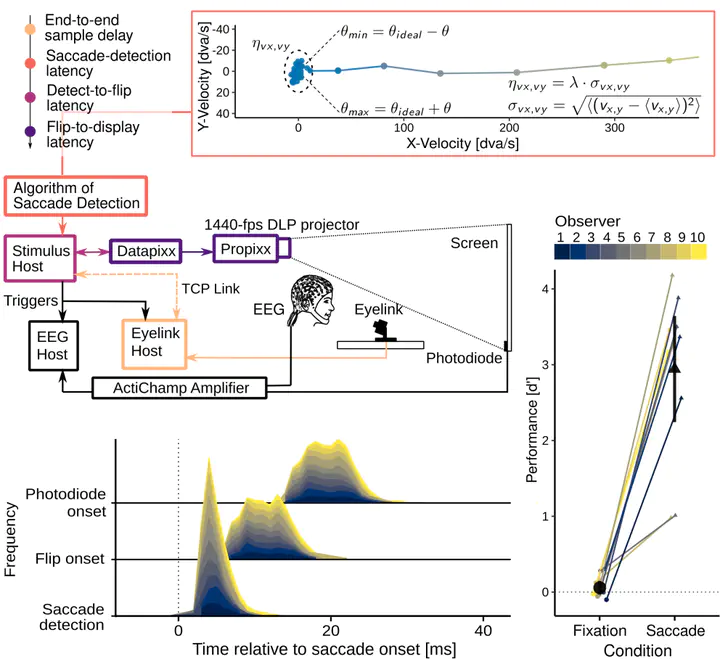

To investigate visual perception around the time of eye movements, vision scientists manipulate stimuli contingent upon the onset of a saccade. For these experimental paradigms, timing is especially crucial, because saccade offset imposes a deadline on the display change. Although efficient online saccade detection can greatly improve timing, most algorithms rely on spatial-boundary techniques or absolute-velocity thresholds, which both suffer from weaknesses – late detections and false alarms, respectively. We propose an adaptive, velocity-based algorithm for online saccade detection that surpasses both standard techniques in speed and accuracy and allows the user to freely define the detection criteria. Inspired by the Engbert–Kliegl algorithm for microsaccade detection, our algorithm computes two-dimensional velocity thresholds from variance in the preceding fixation samples, while compensating for noisy or missing data samples. An optional direction criterion limits detection to the instructed saccade direction, further increasing robustness. We validated the algorithm by simulating its performance on a large saccade dataset and found that high detection accuracy (false-alarm rates below 1%) could be achieved with detection latencies of only 3 ms. High accuracy was maintained even under simulated high-noise conditions. To demonstrate that purely intrasaccadic presentations are technically feasible, we devised an experimental test in which a Gabor patch drifted at saccadic peak velocities. Whereas this stimulus was invisible when presented during fixation, observers reliably detected it during saccades. Photodiode measurements verified that – including all system delays – the stimuli were physically displayed on average 20 ms after saccade onset. Thus, the proposed algorithm provides a valuable tool for gaze-contingent paradigms.

To study intrasaccadic vision, we need stimulus manipulations that occur strictly during saccades. Because saccades are brief, this can be difficult: various system latencies (eye tracker, refresh cycle, video delay, among others) have to be taken into account. While most of these delays are hardware-dependent, one opportunity to alleviate timing issues in gaze-contingent eye-tracking paradigms is to apply efficient online saccade detection. In this paper, we describe such an algorithm, validate it in simulations and experiments, and make it publicly available so that it can be used with a range of different programming languages.
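To make the core idea concrete, the following Python sketch illustrates adaptive, velocity-based detection in the spirit of the Engbert–Kliegl approach: two-dimensional velocity thresholds are derived from a median-based estimate of velocity variance in the preceding samples, and a saccade is flagged when the most recent samples all fall outside the resulting threshold ellipse. It is a minimal illustration under stated assumptions, not the published implementation. The function names, the parameters `lam`, `k`, `direction_deg`, and `tol_deg`, the two-point velocity estimate, and the handling of missing samples (simply dropping non-finite values) are all hypothetical choices made for this example.

```python
import numpy as np


def median_sd(v):
    """Median-based spread estimate: sqrt(median(v^2) - median(v)^2)."""
    return np.sqrt(max(np.median(v ** 2) - np.median(v) ** 2, 0.0))


def detect_saccade(x, y, t, lam=10.0, k=3, direction_deg=None, tol_deg=45.0):
    """Return True if the last k velocity samples exceed adaptive thresholds.

    x, y : gaze positions (e.g., in degrees of visual angle)
    t    : sample timestamps in seconds
    lam  : threshold multiplier (assumed name and default)
    k    : number of most recent velocity samples that must all exceed
           the threshold (assumed name and default)
    direction_deg, tol_deg : optional direction criterion; instructed
           direction and tolerated angular deviation (assumed names)
    """
    x, y, t = (np.asarray(a, dtype=float) for a in (x, y, t))

    # Assumption: missing samples are coded as NaN and are simply dropped.
    valid = np.isfinite(x) & np.isfinite(y) & np.isfinite(t)
    x, y, t = x[valid], y[valid], t[valid]

    # Causal two-point velocity estimate (uses past samples only).
    if x.size < 2:
        return False
    dt = np.diff(t)
    vx, vy = np.diff(x) / dt, np.diff(y) / dt
    if vx.size < k + 3:
        return False  # too few samples to estimate thresholds

    # Thresholds are computed from the samples preceding the last k.
    eta_x = lam * median_sd(vx[:-k])
    eta_y = lam * median_sd(vy[:-k])
    if eta_x == 0.0 or eta_y == 0.0:
        return False  # no variance estimate available

    # Elliptic criterion: a sample counts if it lies outside the ellipse.
    outside = (vx[-k:] / eta_x) ** 2 + (vy[-k:] / eta_y) ** 2 > 1.0

    if direction_deg is not None:
        # Smallest absolute angular difference to the instructed direction.
        ang = np.degrees(np.arctan2(vy[-k:], vx[-k:]))
        diff = np.abs((ang - direction_deg + 180.0) % 360.0 - 180.0)
        outside &= diff <= tol_deg

    return bool(np.all(outside))
```

In a gaze-contingent loop, a function like this would be called on the most recent window of samples each time new data arrive from the eye tracker. Larger values of `lam` or `k` would be expected to reduce false alarms at the cost of later detection, which corresponds to the trade-off between accuracy and latency described above.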