Lawful kinematics link eye movements to the limits of high-speed perception

Abstract

Perception relies on active sampling of the environment. What part of the physical world can be sensed is limited by biophysical constraints of sensory systems, but might be further constrained by the kinematic bounds of the motor actions that acquire sensory information. We tested this fundamental idea for humans’ fastest and most frequent behavior, saccadic eye movements, which entail retinal motion that commonly escapes visual awareness. We discover that the visibility of a high-speed stimulus, presented during fixation, is predicted by the lawful sensorimotor contingencies that saccades routinely impose on the retina, reflecting even distinctive variability between observers’ movements. Our results suggest that the visual system’s functional and implementational properties are best understood in the context of the movement kinematics that impact its sensory surface.

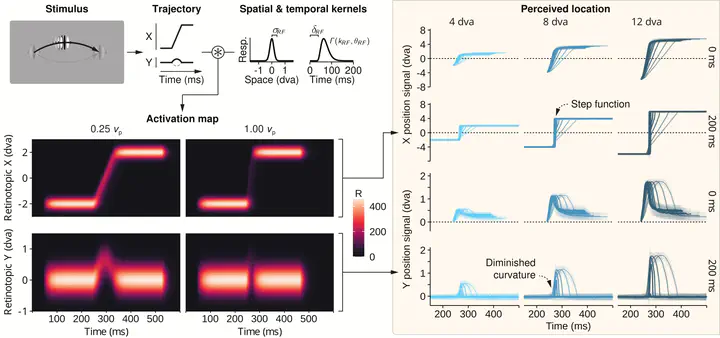

In this paper, we report a mysterious finding. When observers detect the rapid motion of a Gabor stimulus oriented orthogonally to its motion direction, its visibility is not determined simply by its absolute velocity but by a combination of velocity and movement distance. Curiously, the specific combination that predicts velocity thresholds follows an oculomotor law: the main sequence, an exponential function describing how peak saccadic velocity increases with saccade amplitude.

My contributions to this paper, of which I am particularly proud, include the masking experiment; the modeling of saccade trajectories, which ultimately revealed significant correlations between saccade metrics and velocity thresholds; and, most importantly, the early vision model that predicts the measured psychophysical data without any parameter fitting, based solely on the trajectory of the stimulus. Finally, I evaluated the timing of the motion stimulus with photometric measurements taken with the LM03 light meter.
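The main sequence is often written as a saturating exponential, v_peak = V_max · (1 − e^(−A/C)), where A is saccade amplitude, V_max the asymptotic peak velocity, and C a constant controlling how quickly velocity saturates. The minimal Python sketch below evaluates that form. It is an illustration only: the default values for `v_max` and `c` are hypothetical placeholders, not estimates from this study, and the exact parameterization used in the paper may differ.

```python
import math


def main_sequence_peak_velocity(amplitude_deg: float,
                                v_max: float = 500.0,
                                c: float = 14.0) -> float:
    """Peak saccadic velocity (deg/s) predicted for a given amplitude (deg).

    Uses the saturating exponential form of the main sequence:
        v_peak = v_max * (1 - exp(-amplitude / c))
    The defaults for v_max (deg/s) and c (deg) are hypothetical
    placeholders chosen for illustration, not values fitted to data.
    """
    if amplitude_deg < 0:
        raise ValueError("amplitude must be non-negative")
    return v_max * (1.0 - math.exp(-amplitude_deg / c))


if __name__ == "__main__":
    # Peak velocity rises steeply for small saccades, then levels off
    # toward v_max as amplitude grows.
    for amp in (1, 2, 4, 8, 16):
        print(f"{amp:>2} deg -> {main_sequence_peak_velocity(amp):6.1f} deg/s")
```

Because the curve saturates, doubling a large amplitude adds much less peak velocity than doubling a small one. This is why a velocity threshold that tracks the main sequence depends on movement distance, not on velocity alone.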